Group - Funded Projects

Alignment for Foundation Models

Goal: Aligning LLMs and Diffusion Models with Human Preference

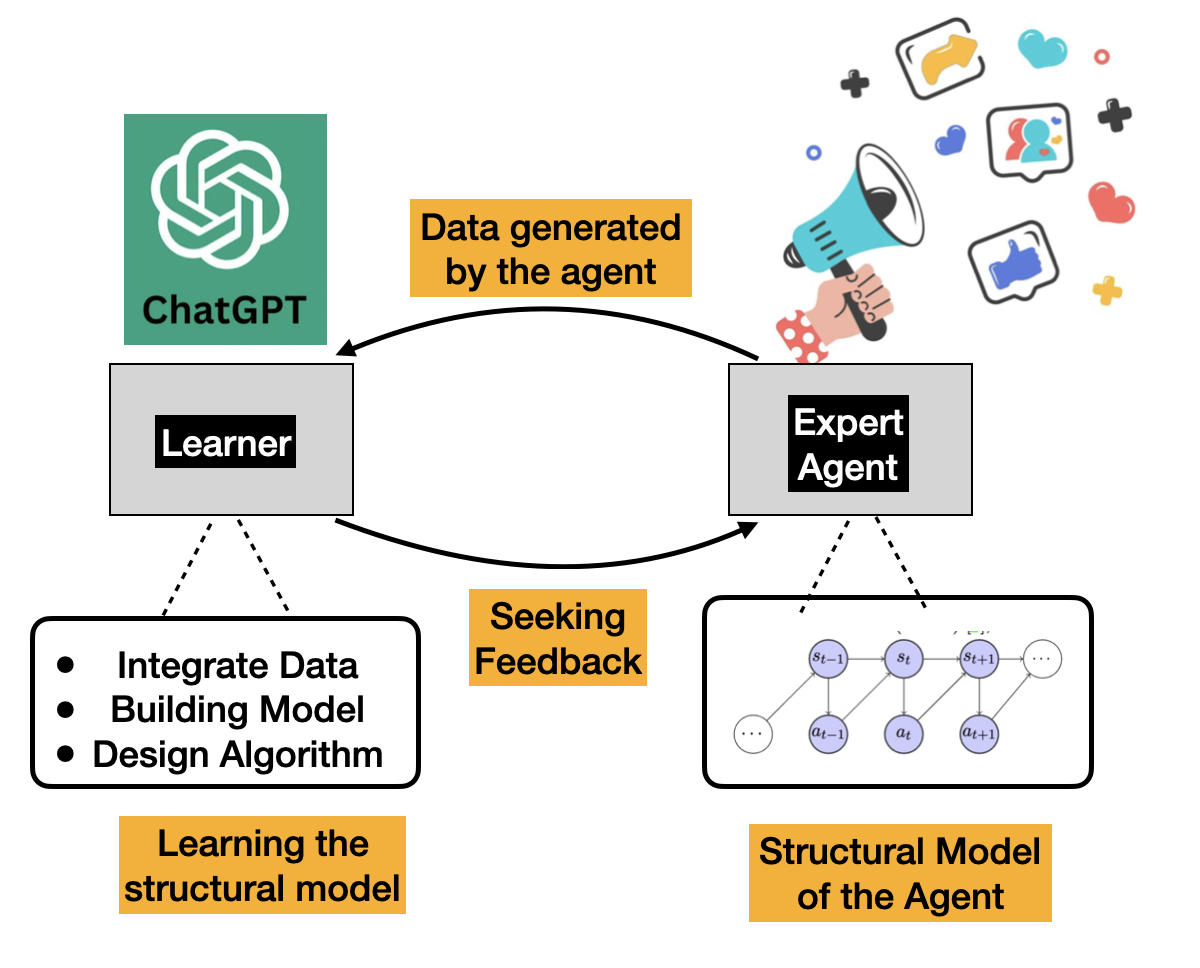

Abstract: The alignment problem generally refers to the process of fine-tuning pretrained LLMs using data generated via human feedback, with the goal of aligning the responses of LLMs with what a typical human user would prefer. Reinforcement learning from human feedback (RLHF) is a technique that has recently generated a lot of interest due to its successful application in aligning LLMs with human preferences. In RLHF, a group of human writers is asked to answer prompts (demonstration data) and to rank a diverse set of continuations for each given prompt (preference data). Then a supervised fine-tuning (SFT) step is performed using the demonstration data, a reward model (RM) is estimated using the preference data, and finally reinforcement learning (RL) is used to further fine-tune the SFT model. In this work, we propose to design novel alignment algorithms for both LLMs and diffusion models.
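To make the reward-modeling step concrete, here is a minimal sketch that fits a reward model to preference pairs with the Bradley-Terry pairwise loss, -log sigmoid(r(chosen) - r(rejected)), which is commonly used in RLHF pipelines. It is an assumption-laden toy: the linear reward `w . x`, the 2-D feature vectors, and the helper names (`fit_reward_model`, `pairwise_loss`) are hypothetical stand-ins for an LLM-based reward model, and this is not the project's proposed algorithm.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def reward(w: list[float], x: list[float]) -> float:
    """Linear reward r(x) = w . x (a hypothetical stand-in for a neural RM)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def pairwise_loss(w, pairs) -> float:
    """Mean of -log sigmoid(r(chosen) - r(rejected)) over preference pairs."""
    return sum(
        -math.log(sigmoid(reward(w, c) - reward(w, r))) for c, r in pairs
    ) / len(pairs)


def fit_reward_model(pairs, dim: int, lr: float = 0.5, epochs: int = 200) -> list[float]:
    """Full-batch gradient descent on the Bradley-Terry pairwise loss."""
    w = [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # d/d(margin) of -log sigmoid(margin) is -(1 - sigmoid(margin)).
            coeff = -(1.0 - sigmoid(margin))
            for i in range(dim):
                grad[i] += coeff * (chosen[i] - rejected[i])
        w = [wi - lr * gi / len(pairs) for wi, gi in zip(w, grad)]
    return w


# Hypothetical features of (preferred, dispreferred) continuations of three prompts.
pairs = [([1.0, 0.2], [0.1, 0.3]), ([0.8, 0.5], [0.2, 0.4]), ([0.9, 0.1], [0.3, 0.2])]
w = fit_reward_model(pairs, dim=2)
```

In a full pipeline, the fitted reward would then score samples from the SFT model during the RL stage.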

Building Robust Text-to-Image Systems

Goal: Develop Robust Text-to-Image Systems

Sponsored by Cisco Research, 2023-2024

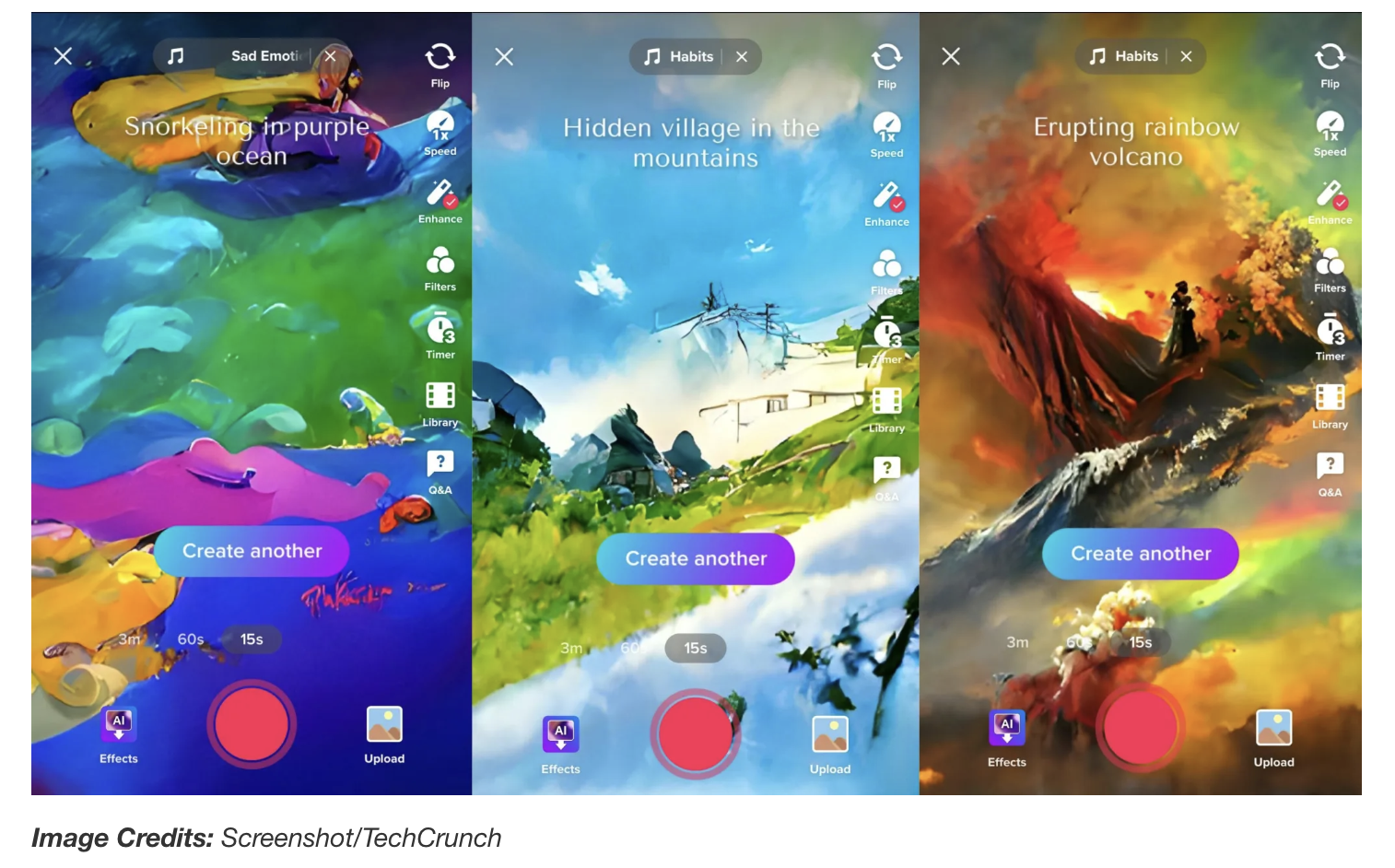

Abstract: Considerable attention has been directed towards generative diffusion models, owing to their remarkable success in generating realistic samples of complex data, including images, text, and audio. One important class of such models is Text-to-Image (T2I) models such as Stable Diffusion, which generate realistic images from natural-language prompts. T2I systems find applications across domains such as computer vision, art, and medical imaging. For instance, they can be used to craft visual artwork from text inputs. The potential of these models has led to their incorporation into various products (e.g., in graphic design).

However, the increasing popularity of T2I systems also brings growing concerns about their misuse, whether unintentional or malicious. For instance, an innocent typo in a textual prompt can result in a generated image that is different from what was expected. More importantly, there is the risk of malicious misuse, wherein textual prompts are manipulated to elicit specific outputs that could be offensive or harmful, such as racist content. Therefore, alongside the effort to improve and deploy these systems, it is important to also develop methods that mitigate the potential effects of such misuse.
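One simple way to probe the typo sensitivity described above is to enumerate small perturbations of a prompt and compare the images generated for each variant. The sketch below only generates the perturbed prompts. It assumes a deliberately crude typo model (one deleted character or one swap of adjacent characters); the function name `typo_variants` is hypothetical, and the T2I model and image-similarity metric needed to score the variants are not shown.

```python
def typo_variants(prompt: str) -> set[str]:
    """Return prompts that differ from `prompt` by one deleted character
    or one swap of two adjacent characters (a hypothetical typo model)."""
    variants = set()
    for i in range(len(prompt)):
        # Deletion of the character at position i.
        variants.add(prompt[:i] + prompt[i + 1:])
        # Swap of the characters at positions i and i + 1.
        if i + 1 < len(prompt):
            variants.add(prompt[:i] + prompt[i + 1] + prompt[i] + prompt[i + 2:])
    # Swapping two identical letters reproduces the original prompt.
    variants.discard(prompt)
    return variants


for variant in sorted(typo_variants("a red cat")):
    print(variant)
```

A robustness check would feed each variant to the generator and flag prompts whose outputs drift far from the image produced for the clean prompt.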

A Multi-Rate Feedback Control Framework for Modeling, Analyzing, and Designing Distributed Optimization Algorithms

Goal: Develop comprehensive decentralized algorithms from an optimal control perspective

Co-PI: Nicola Elia

Sponsored by NSF, 2023-2026

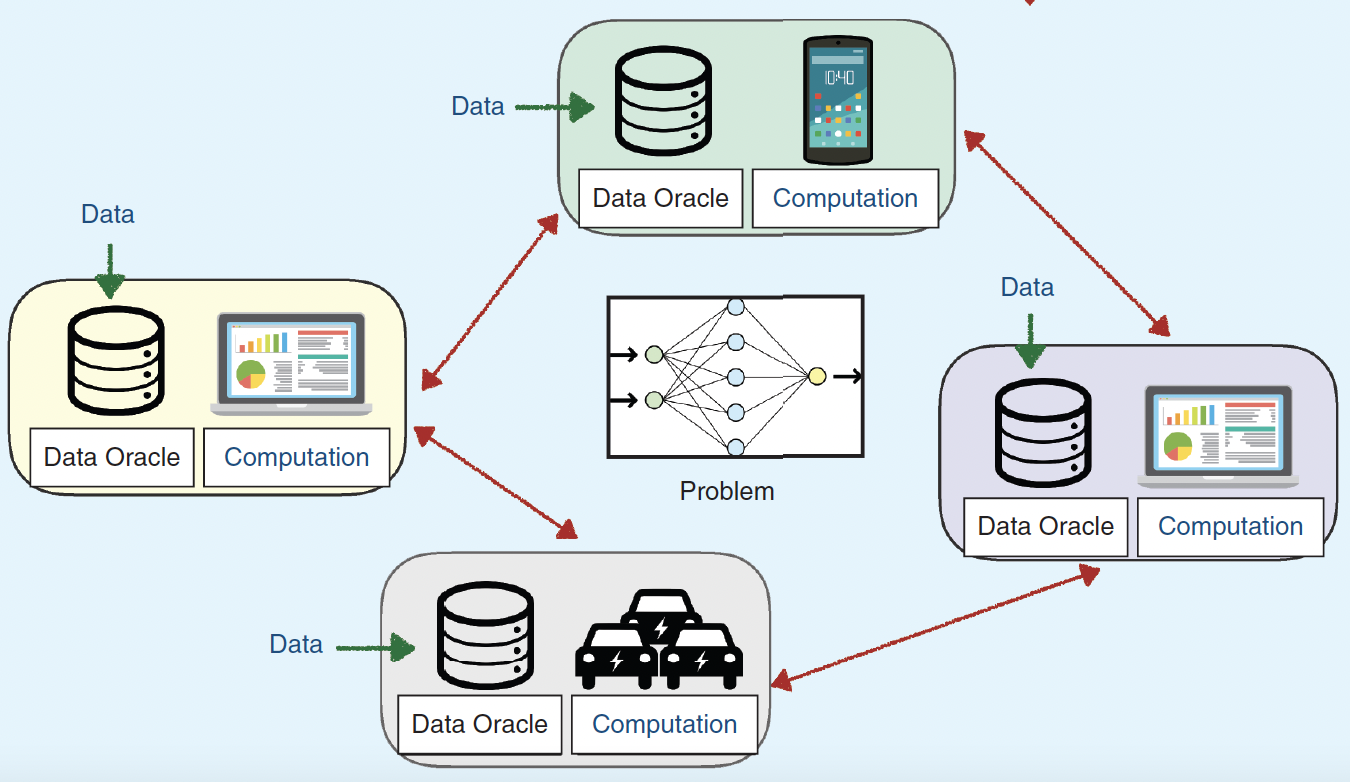

Abstract: Distributed systems hold great promise for realizing the scalable processing and real-time intelligence required by modern life. However, despite extensive research in distributed algorithms and systems, several challenges persist in their synthesis and application. In particular, the current algorithm design process does not scale, since one needs to design a new algorithm and develop the corresponding analysis for each specific application scenario (e.g., federated learning) with a specific set of requirements (e.g., communication efficiency and privacy). There is an urgent need to unify the various subclasses of distributed algorithms, so as to provide insights and to streamline their design and analysis.

In this work, we advocate a generic “model” of distributed algorithms (based on techniques from stochastic multi-rate feedback control) that abstracts their important features (e.g., privacy-preserving mechanisms, compressed communication, occasional communication) into tractable modules. Building upon these abstract models, we then design a framework with enough modeling power to encompass a substantial class of distributed algorithms. Such a framework can be used to analyze the entire algorithm class; more importantly, it helps streamline the design of new algorithms, in the sense that features arising from different application domains can be easily integrated.
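As an illustration of treating features as pluggable modules, the sketch below runs decentralized gradient descent in which the communication step takes a swappable compression function and runs at a slower rate than local computation (every `period` iterations, i.e., occasional communication). This is a hypothetical toy, not the project's multi-rate control framework: the scalar local objectives f_i(x) = 0.5 (x - b_i)^2, the mixing matrix `W`, and the names `decentralized_gd` and `make_quantizer` are all assumptions.

```python
from typing import Callable, List


def identity(v: float) -> float:
    return v


def make_quantizer(step: float) -> Callable[[float], float]:
    """Compression module: round each transmitted value to a grid of width `step`."""
    return lambda v: round(v / step) * step


def decentralized_gd(
    b: List[float],
    W: List[List[float]],
    alpha: float,
    iters: int,
    compress: Callable[[float], float] = identity,
    period: int = 1,
) -> List[float]:
    """Agent i minimizes f_i(x) = 0.5 * (x - b[i])**2 locally and mixes with
    its neighbors through `compress` once every `period` iterations."""
    n = len(b)
    x = [0.0] * n
    for t in range(iters):
        # Local computation module: one gradient step on f_i (runs every iteration).
        x = [x[i] - alpha * (x[i] - b[i]) for i in range(n)]
        # Communication module: runs at a slower rate when period > 1.
        if (t + 1) % period == 0:
            sent = [compress(v) for v in x]
            x = [
                W[i][i] * x[i] + sum(W[i][j] * sent[j] for j in range(n) if j != i)
                for i in range(n)
            ]
    return x


# Three fully connected agents with a doubly stochastic averaging matrix.
W = [[1 / 3] * 3 for _ in range(3)]
b = [1.0, 2.0, 6.0]  # the minimizer of sum_i f_i is mean(b) = 3.0
exact = decentralized_gd(b, W, alpha=0.1, iters=200)
occasional = decentralized_gd(b, W, alpha=0.1, iters=200, period=3)
compressed = decentralized_gd(b, W, alpha=0.1, iters=200, compress=make_quantizer(0.25))
```

Swapping `compress` or `period` changes the algorithm without touching the local-update code, which is the kind of modularity the abstract describes; the quantized variant only reaches a neighborhood of the optimum.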

Bridging the Algorithm-Architecture Gap for Internet-of-Things Technologies

Internal Digital Technology Initiative Seed Grant, 2018-2019

Goal: Develop practical distributed algorithms implementable on bespoke computer processors

Co-PIs: Mingyi Hong, John Sartori and Sairaj V. Dhople (ECE Department, UMN)

Abstract:

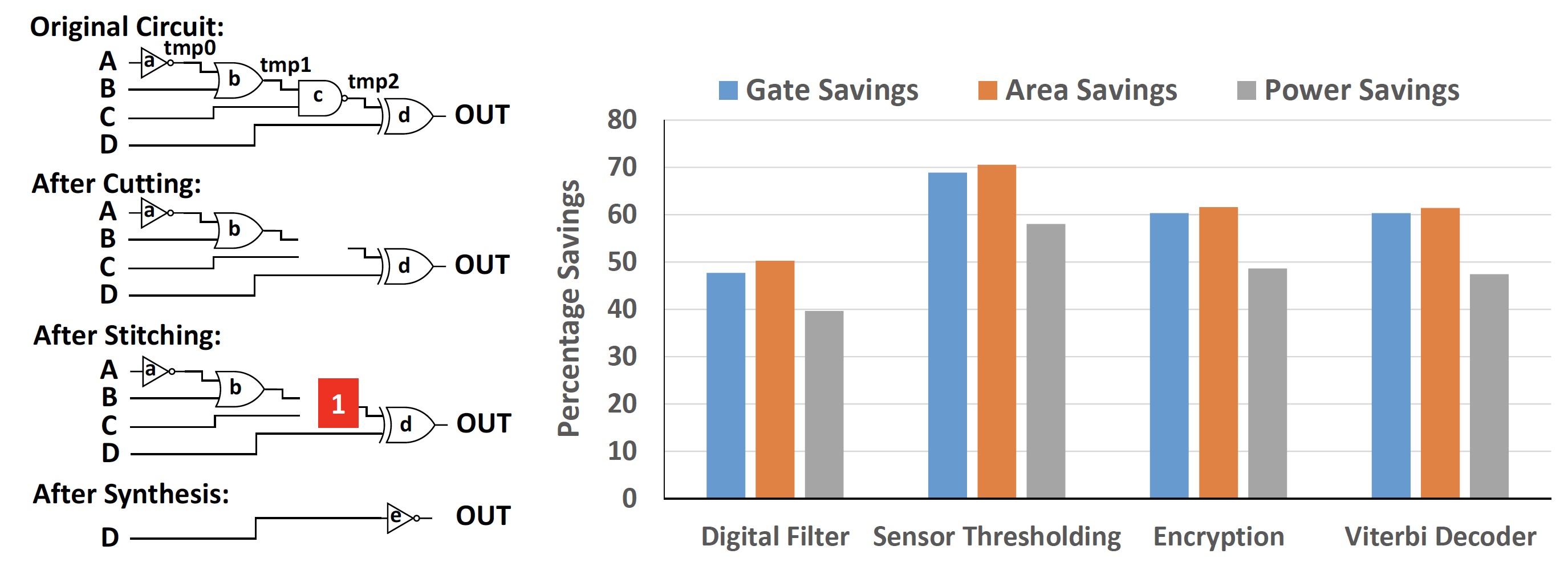

Through this project, we aim to bridge the gap between numerical algorithms and computer architectures in Internet-of-Things (IoT) technologies, with distributed energy resources (DERs) serving as the application backdrop. While the literature on distributed algorithms for monitoring, management, diagnostics, and control of DERs (covering applications such as coordinating fleets of electric vehicles, managing energy-storage devices, and regulating power quality in distribution networks) is extensive, the solution strategies for these algorithms are seldom cognizant of the area and power constraints of the microcontrollers and microprocessors that eventually house them. This disconnect creates an unfortunate barrier between paper and practice that, in the state of the art, is ultimately resolved either with stripped-down versions of algorithms that do not offer the performance guarantees of the original renditions, or with costly, conservative, and power-hungry architectures that cannot meet the ultra-low power and area constraints that are essential in the IoT domain. Our research agenda focuses on a class of optimization problems germane to DER applications and extracts fundamental correlations between these algorithms and the area and power requirements of bespoke computer processors. This will allow us to significantly improve the area and energy efficiency of IoT nodes without sacrificing performance or functionality, thus maintaining the guarantees provided by the algorithms as designed. While the application in this proposal pertains to the critical infrastructure of power networks, successful project completion will outline a replicable design methodology that is cognizant of the foundational links between algorithms and architectures, ensuring broad translational impact across the wider IoT ecosystem.
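One concrete link between an algorithm and the hardware that runs it is numerical precision: low-power processors often use narrow fixed-point datapaths rather than floating point, and narrower datapaths generally need less area and energy. The sketch below models gradient descent on a toy quadratic with every quantity stored as an integer scaled by 2**frac_bits, so that the final accuracy can be compared across word lengths. The objective, the Q-format model, and the names `to_fixed` and `fixed_point_gd` are hypothetical illustrations, not the project's methodology.

```python
def to_fixed(v: float, frac_bits: int) -> int:
    """Quantize a real number to an integer with `frac_bits` fractional bits."""
    return round(v * (1 << frac_bits))


def fixed_point_gd(target: float, alpha: float, iters: int, frac_bits: int) -> float:
    """Gradient descent on f(x) = 0.5 * (x - target)**2, with every quantity
    held as an integer scaled by 2**frac_bits (a software model of a
    narrow fixed-point datapath)."""
    scale = 1 << frac_bits
    x = 0
    t = to_fixed(target, frac_bits)
    a = to_fixed(alpha, frac_bits)
    for _ in range(iters):
        grad = x - t
        # Fixed-point multiply: the product carries 2 * frac_bits fractional
        # bits, so shift it back down by dividing by the scale.
        x = x - (a * grad) // scale
    return x / scale


for bits in (4, 8, 12):
    err = abs(fixed_point_gd(0.3, 0.5, 200, bits) - 0.3)
    print(f"{bits:2d} fractional bits -> error {err:.2e}")
```

In this toy the iteration itself converges exactly on the integer grid, so the residual error comes from representing the target with a limited number of bits; richer algorithms would also accumulate rounding error across iterations.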

Decomposition Framework for Non-convex Nonsmooth Optimization with Applications in Data Analytics

NSF, Grant No. CMMI-1727757, 2017-2021

Goal: Design non-convex algorithms with strong theoretical guarantees for a number of data analytics problems.

Abstract: Rapid advances in sensor, communication and storage technologies have led to the availability of data on an unprecedented scale. Depending on the source, these data may represent measurements, images, texts, time series and a variety of other formats. Significant challenges remain in translating the increasing amount of data into useful and actionable information. The objective of this project is to address this information dilemma through the lens of modern large-scale optimization. This project supports research on methods to effectively process large-scale, unstructured, complex data so that it is usable in applications such as bioinformatics, smart energy systems, manufacturing, and healthcare. The project will also engage graduate students in the research activities and will support outreach to undergraduate STEM students through an existing program at the PI's university.

This project will focus on the construction of a general optimization and computational framework that enables a number of promising but challenging large-scale data-intensive applications. The research comprises two major thrusts. The first will build and analyze a novel optimization-based primal-dual decomposition framework that transforms a large, tightly coupled, non-convex problem into a sequence of independent subproblems solvable by parallel machines. The second applies the decomposition framework to a number of important emerging data-intensive applications, including high-dimensional clustering, topic modeling, and robust high-dimensional regression. Fundamental questions, such as optimality, convergence rates, and scalability in high dimensions, will be investigated. The project will test the developed methods using data from two important energy applications: smart energy meters and real-time residential photovoltaic inverters.
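To show what "a sequence of independent subproblems" can look like, the sketch below applies standard consensus ADMM, a well-known primal-dual splitting method, to a small convex toy problem: minimize sum_i 0.5 a_i (x - b_i)^2. Each local copy x_i is updated independently (and could run on a separate machine), a coordination step averages them, and dual variables price the remaining disagreement. The quadratic objective and the name `consensus_admm` are assumptions for illustration; the project's framework targets non-convex problems and is not reproduced here.

```python
def consensus_admm(a, b, rho=1.0, iters=200):
    """Minimize sum_i 0.5 * a[i] * (x - b[i])**2 by giving each block its own
    copy x_i and enforcing x_i = z through dual variables lam_i."""
    n = len(a)
    x = [0.0] * n
    lam = [0.0] * n  # dual variables for the constraints x_i = z
    z = 0.0
    for _ in range(iters):
        # Primal step: n independent closed-form subproblems
        #   argmin_x 0.5*a_i*(x - b_i)**2 + lam_i*(x - z) + (rho/2)*(x - z)**2,
        # which could be solved in parallel.
        x = [(a[i] * b[i] - lam[i] + rho * z) / (a[i] + rho) for i in range(n)]
        # Coordination step: average the local copies plus scaled duals.
        z = sum(x[i] + lam[i] / rho for i in range(n)) / n
        # Dual step: accumulate the disagreement between x_i and z.
        lam = [lam[i] + rho * (x[i] - z) for i in range(n)]
    return z, x


# The exact minimizer is sum(a_i * b_i) / sum(a_i) = 7 / 6.
z, x = consensus_admm(a=[1.0, 2.0, 3.0], b=[1.0, 0.0, 2.0])
```

For convex quadratics like this one ADMM converges to the global minimizer; for non-convex problems the same split-and-coordinate pattern applies, but convergence guarantees require the kind of analysis the project proposes.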

Optimal Provision of Backhaul and Radio Access Networks: A Cross-Network Approach

NSF, Grant No. CCF-1526078, 2015-2018

Goal: Provide theoretical insights and design algorithms for optimal provisioning of large-scale radio access networks.

Abstract: The explosive growth in the number of smart consumer devices has led to projections that, within 10 years’ time, wireless cellular networks will need to offer a 1000x throughput increase over current 4G technology. By that time, the network should be able to deliver a fiber-like user experience, with individual transmission rates of 10 Gb/s for data-intensive cloud-based applications. To move such a huge amount of data from the network to the users’ handheld devices in real time, revolutionary network infrastructure and advanced network provisioning are required. Two key enablers of the envisioned future mobile networks are the ultra-dense deployment of base stations and centralized cloud-based processing. This project addresses the challenging problem of managing such densely deployed, cloud-based radio access networks.

The proposed research includes the introduction of a unified cross-network framework to manage a cloud-based radio access network. Important aspects of resource management in different sub-networks, including the backhaul and the cloud networks, will be considered. The project focuses on providing theoretical insights as well as designing practical algorithms for the optimal provisioning of a cloud-based radio access network. Fundamental computational issues of key cross-network resource management tasks will be investigated, revealing their intrinsic complexity. Moreover, practical schemes capable of optimally utilizing resources across networks will be developed, using cross-network optimization formulations. These algorithms will be optimized to fully exploit the computational resources offered by the cloud centers, addressing key issues such as parallel/distributed implementation, asynchronous computation, and load balancing. The goal is to determine how computational resources should be deployed through the proposed cross-network framework to dramatically improve the performance of a cloud-based radio access network.


Mechanism Design for Complex Systems: A Black-box Model Approach

AFOSR, Grant No. 15RT0767, 2015-2019

Goal: Investigate fundamental research questions of mechanism design with a “black-box model”

Abstract: Many complex resource allocation problems require vast amounts of distributed input information that is proprietary to stakeholders. A resource allocation of this kind, with incomplete knowledge of the stakeholders' private information, is an optimization problem with a game-theoretic twist: a stakeholder may misrepresent their input information if doing so is to their own gain. This kind of behavior can result in a highly suboptimal allocation of resources. Designing rules of interaction between the resource allocator and the stakeholders (players) so that an optimal allocation can be implemented (without the possibility of input manipulation) is the overarching goal of mechanism design.


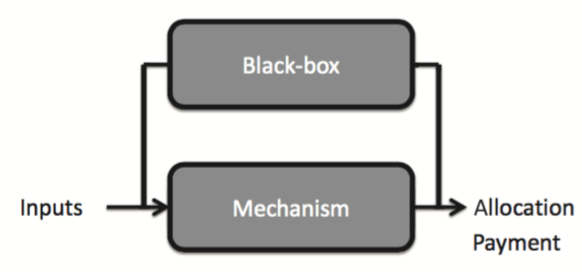

An optimal mechanism is a game whose rules are designed by the resource allocator so that the desired outcome (i.e., the optimal allocation) is obtained in equilibrium. A mechanism is said to be incentive compatible (IC) if it is in each player's best interest to report their private input information truthfully. In large-scale complex engineering systems, an additional layer of complexity arises when there is no closed-form analytical expression for evaluating different resource allocation strategies. In many complex resource allocation problems, this evaluation can only be achieved by repeatedly running a simulation model, which is akin to sequentially querying an “oracle” or “black-box”. Hence, in this context a mechanism must interact with a black-box model, as represented in the figure above.

To illustrate a concrete application of mechanism design with black-box interaction, consider the allocation of the National Airspace System's (NAS) capacity following a disruption, such as a severe weather incident, that reduces available capacity. The short-term imbalance between route demand and capacity must be resolved within a short time span. To maximize allocation efficiency, the NAS manager may direct some airlines' flights with lower delay-associated costs to incur a ground delay, and thus allocate the limited routing capacity to other airlines' flights with higher delay-associated costs. This type of resource management strategy relies on (i) complete information on the different airlines' preferences regarding delay and (ii) the ability to evaluate nonlinear tradeoffs in the allocation of available routing capacity. In practice, the network resource manager does not have complete information on the different airlines' delay-associated costs, nor does it have access to a closed-form analytical expression for evaluating capacity tradeoffs.

This motivates us to investigate fundamental research questions of mechanism design with a “black-box model” for evaluating different allocation strategies. In the proposed research, we consider a design that takes the form of an iterative auction. In such an auction, the players (stakeholders) are asked to report their desired levels of capacity at each round, and the auctioneer checks the feasibility of the resulting allocation by consulting a “black-box” and updates prices accordingly.
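The round structure of such an iterative auction can be sketched as a price-adjustment (tâtonnement) loop in which the auctioneer never sees the capacity model directly, but only queries it. The assumptions here are loud: a single shared resource; players with utility value * log(1 + q) - price * q, so that each reports demand max(0, value / price - 1); and a black box that returns how far the total request exceeds the available capacity. The names `make_black_box`, `player_demand`, and `iterative_auction` are hypothetical, and the sketch does not address incentive compatibility, which is the core research question.

```python
from typing import Callable, List, Tuple


def make_black_box(capacity: float) -> Callable[[List[float]], float]:
    """Hypothetical oracle (e.g., a simulation): the auctioneer can query it but
    not inspect it. It returns total requested capacity minus available capacity."""
    def oracle(requests: List[float]) -> float:
        return sum(requests) - capacity
    return oracle


def player_demand(value: float, price: float) -> float:
    """Best response of a player with (assumed) utility value*log(1+q) - price*q."""
    return max(0.0, value / price - 1.0)


def iterative_auction(
    values: List[float],
    oracle: Callable[[List[float]], float],
    price: float = 1.0,
    step: float = 0.1,
    rounds: int = 200,
    tol: float = 1e-6,
) -> Tuple[float, List[float]]:
    for _ in range(rounds):
        requests = [player_demand(v, price) for v in values]  # players report
        excess = oracle(requests)  # auctioneer consults the black box
        if abs(excess) < tol:
            break
        # Raise the price when over capacity, lower it when capacity is left over.
        price = max(1e-6, price + step * excess)
    return price, [player_demand(v, price) for v in values]


price, allocation = iterative_auction([4.0, 6.0], make_black_box(3.0))
```

With these toy numbers, the market-clearing price solves 4/p + 6/p - 2 = 3, that is p = 2, at which the players request 1 and 2 units of the 3 available.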


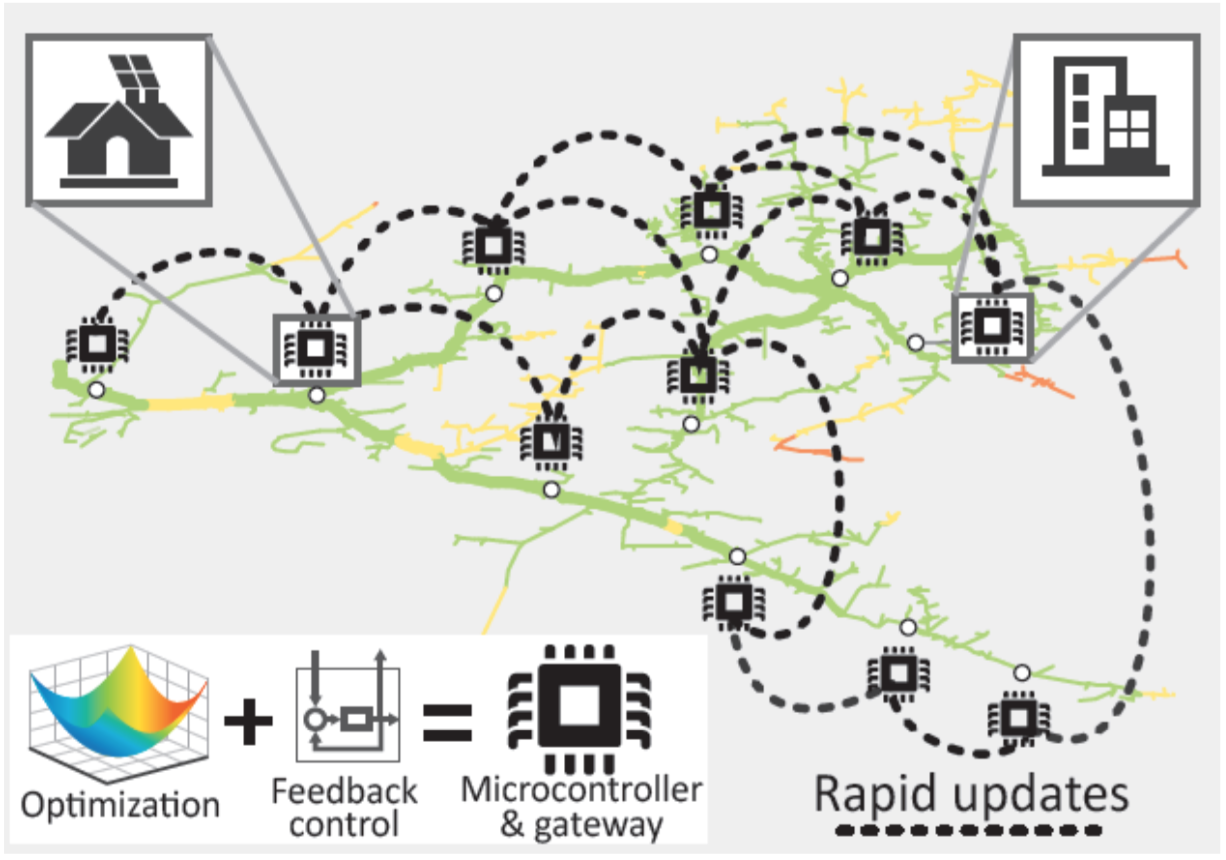

Distributed inverter controllers seeking reliability and economic-optimality of photovoltaic-dominant distribution systems

DOE NREL, LDRD Program, 2015-2017

Goal: Develop innovative distributed controllers for efficient PV-inverter operation.

Abstract: Increased behind-the-meter photovoltaic (PV) capacity has precipitated a set of distribution-system-level challenges that impede the widespread adoption of PV systems. For example, reverse power flows due to PV generation exceeding load demand increase the likelihood of voltages violating prescribed limits, while fast variations in the PV output tend to cause transients that ultimately lead to wear-out of legacy switchgear. Recent academic and industrial efforts have developed distributed optimization and control approaches to distribution-system management that are inspired by, and adapted from, legacy methodologies and practices in bulk power systems; however, these approaches are not compatible with the form and function of distribution systems with high PV penetration, and therefore do not offer a way to systematically address emerging efficiency, reliability, and power-quality concerns. Accordingly, the objective of this project is to develop an innovative distributed PV-inverter control architecture that: 1) maximizes PV penetration while simultaneously optimizing system performance; 2) bridges the temporal gap between real-time control and steady-state optimization to enable unprecedented adaptability to fast-varying irradiation and load conditions; and 3) seamlessly integrates control, algorithms, and communication systems to create the next-generation cyber-physical architecture required to support distribution-grid operations. Whereas heuristics and various other methods proposed in the literature are not grounded on solid analytical foundations, the present project will develop distributed controllers with strong and universal theoretical claims regarding convergence, stability, and economic optimality. The proposed solutions will be validated using a comprehensive, tiered approach of software-only simulation and hardware-in-the-loop (HIL) simulation.
Arduino boards will be utilized to create and embed the proposed optimization-centric controllers into next-generation gateways and inverters.
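To illustrate how a distributed controller can bridge real-time feedback and steady-state optimization, the sketch below runs a primal-dual feedback loop for voltage regulation. Each inverter sets its reactive power from a broadcast multiplier and its own sensitivity, and the multiplier is updated from the measured voltage. Everything here is a hypothetical toy: a single monitored bus with a linearized model v = v_base + sum_i x_i q_i (per unit), the cost sum_i 0.5 q_i^2, the inverter limits, the step size `eta`, and the function names. It is not the project's controller.

```python
def clip(v: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, v))


def voltage(q, v_base, x):
    """Hypothetical linearized feeder model: v = v_base + sum_i x_i * q_i (p.u.)."""
    return v_base + sum(xi * qi for xi, qi in zip(x, q))


def primal_dual_volt_control(v_base, v_max, x, q_max, eta=1000.0, steps=100):
    """Approximately solve min sum_i 0.5*q_i**2 s.t. v(q) <= v_max, |q_i| <= q_max_i
    by dual ascent driven by voltage feedback."""
    mu = 0.0  # multiplier (price) on the upper voltage limit, broadcast to inverters
    q = [0.0] * len(x)
    for _ in range(steps):
        # Each inverter minimizes 0.5*q_i**2 + mu*x_i*q_i within its own limits,
        # using only mu and its local sensitivity x_i.
        q = [clip(-mu * xi, -qm, qm) for xi, qm in zip(x, q_max)]
        # The voltage is measured (here: simulated) and the price is updated.
        v = voltage(q, v_base, x)
        mu = max(0.0, mu + eta * (v - v_max))
    return q, mu


# Over-voltage case: 1.06 p.u. must be pulled down to the 1.05 p.u. limit.
q, mu = primal_dual_volt_control(1.06, 1.05, x=[0.01, 0.02], q_max=[2.0, 2.0])
```

When the voltage is already within limits, the multiplier stays at zero and the inverters inject no reactive power; under over-voltage, the inverter with the larger sensitivity absorbs more reactive power.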